Video File Formats

Video files are collections of images, audio and other data. The attributes of the video signal include the pixel dimensions, frame rate, audio channels, and more. In addition, there are many different ways to encode and save video data. This page outlines the key characteristics of the video signal, and the file formats used to capture, work with, and deliver that data.

What’s a file format?

A file format is the structure in which information is stored (encoded) in a computer file. Depicting a video signal accurately requires a large amount of data, so this information is often compressed and written into a container file. This section outlines digital video file formats: what they are, the differences between them, and how best to use them.

There are many different formats for saving video, which can be confusing. Some formats are optimal for capturing video, and some are best used in editing workflows.

And there are quite a few video formats used for delivery and distribution.

It’s a jungle out there

Video files are significantly more complex than still image files. Not only do they hold many more kinds of information, but their structure is also much more “mix-and-match”. You can tell a lot about most still image files from the file extension, but that does not hold for video. The file type (such as .MOV) is just a container, and it could be filled with really low-quality web video, or it might hold ultra-high-quality 3-D video and five channels of theater-quality audio.

As photographers, we’ve become accustomed to Photoshop’s ability to open nearly any image file and work with it. The digital video world, by contrast, depends on hundreds of different codecs to make and play files. And just because two files were made by codecs from the same encoding family, such as H.264, does not mean they can be played interchangeably. Yikes.

And yet, once you understand some of the basic structural elements, it is possible to learn enough to get your work done. Let’s dive in, starting from the outside and peeling back the layers.

The anatomy of a video file

While there is a wide range of video formats, in general, each format has the following characteristics.

- A container type: AVI and QuickTime MOV are two examples of container types.

- The video and audio signal: This is the actual video and audio data, which has the characteristics described in the next section.

- A codec: Codec refers to the software that is used to encode and decode the video signal. Video applications use a codec to write the file and to read it. It may be built into the program, or installed separately.

The characteristics of a video signal

Every video file has some attributes that describe what makes up the video signal. These characteristics include:

- Frame size: This is the width and height of the frame in pixels, such as 1920×1080.

- Aspect ratio: This is the ratio of frame width to height, such as 16:9.

- Frame rate: This is the speed at which the frames are captured and intended for playback.

- Bitrate: The bitrate, or data rate, is the amount of data used each second to describe the audio or video portion of the file. It is typically measured in kilobits or megabits per second (Kbps or Mbps). In general, for a given codec, the higher the bitrate, the better the quality.

- The audio sample rate: This is how often the audio is sampled when converted from an analog source to a digital file, measured in samples per second (for example, 48 kHz).
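Two of these attributes are connected by simple arithmetic. The sketch below (plain Python; the function names are hypothetical) reduces a frame size to its aspect ratio and converts a frame rate into the time each frame stays on screen. It assumes square pixels; formats with non-square pixels have a display aspect ratio that differs from the raw pixel ratio.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> str:
    """Reduce a frame size in pixels to its simplest width:height ratio.

    Assumes square pixels; non-square-pixel formats display at a
    different aspect ratio than this raw pixel ratio.
    """
    divisor = gcd(width, height)
    return f"{width // divisor}:{height // divisor}"


def frame_duration_ms(fps: float) -> float:
    """How long each frame stays on screen, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


print(aspect_ratio(1920, 1080))          # 16:9
print(aspect_ratio(640, 480))            # 4:3
print(round(frame_duration_ms(30), 2))   # 33.33
```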

File Format “Container”

All file formats are structures for bundling up some type of data. And nearly every computer file has more than one type of data inside the file. Video files are particularly complex internally, but they all need to be stored in one of the basic container types. Within a particular container type, such as QuickTime or AVI, there can be a vast difference between the video signal attributes, the codecs used, and the compression.

In some ways, this is similar to the large number of raw file formats for still photography. You may have two files with the file extension CR2, but that does not mean they are equivalent. The “guts” of each of the files may have different resolutions, for instance, and a piece of software that can open one of these may not be capable of opening the other one.

Container extensions include MOV, AVI, FLV, MP4, and MXF.

What’s in the container?

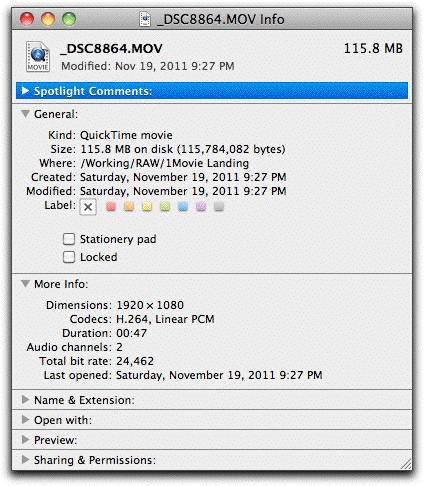

If you can’t tell much about a file from its extension, how do you know what’s in it? Your system software may tell you part of the story. On Mac, select the file and choose File > Get Info. You can see some of the file attributes in the “General” and “More Info” sections, as shown in Figure 1. On PC, right-click the file, select Properties, and look at the Details tab.

FIGURE 1 Your OS can tell you something about a video file. Here is the Mac file info from a Nikon D7000 video file.

Additionally, there are freestanding utilities that may give you even more information.

- If you are on PC, then a program called GSpot can provide additional information. http://www.headbands.com/gspot/

- MediaInfo is a cross-platform program that can also provide some information about a video file beyond what the OS tells you.
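For MOV and MP4 files you can also peek inside the container yourself. Both formats are built from nested “boxes” (QuickTime calls them “atoms”), each starting with a 4-byte big-endian size and a 4-byte type code such as `ftyp`, `moov`, or `mdat`. The Python sketch below lists only the top-level boxes; it does not descend into `moov` to find the codec, which is the kind of digging utilities like MediaInfo do for you. The function names and sample bytes are illustrative and not part of any tool mentioned above.

```python
import io
import struct
from typing import BinaryIO, Iterator, Tuple


def top_level_boxes(f: BinaryIO) -> Iterator[Tuple[str, int]]:
    """Yield (box_type, box_size) for each top-level box of an MP4/MOV stream."""
    while True:
        header = f.read(8)
        if len(header) < 8:
            return  # end of file (or a truncated header)
        size, raw_type = struct.unpack(">I4s", header)
        box_type = raw_type.decode("latin-1")
        header_len = 8
        if size == 1:
            # A 64-bit "largesize" field follows the type code.
            extended = f.read(8)
            if len(extended) < 8:
                return
            (size,) = struct.unpack(">Q", extended)
            header_len = 16
        elif size == 0:
            # Size 0 means the box runs to the end of the file.
            payload_start = f.tell()
            f.seek(0, io.SEEK_END)
            yield box_type, f.tell() - payload_start + header_len
            return
        if size < header_len:
            return  # malformed size; stop instead of looping forever
        yield box_type, size
        f.seek(size - header_len, io.SEEK_CUR)  # skip over the payload


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a minimal box for testing: 4-byte size, 4-byte type, payload."""
    return struct.pack(">I", 8 + len(payload)) + box_type + payload


sample = (make_box(b"ftyp", b"qt  \x00\x00\x00\x00")
          + make_box(b"moov", b"")
          + make_box(b"mdat", b"\x00" * 100))
print(list(top_level_boxes(io.BytesIO(sample))))
# [('ftyp', 16), ('moov', 8), ('mdat', 108)]
```

On a real file you would pass a file opened in binary mode, for example `open("clip.mov", "rb")` (the file name is hypothetical). In camera files the `mdat` box, which holds the encoded video and audio, is usually by far the largest.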

Codecs

Inside every video file container is the video and audio data. This data is created by a piece of software called a codec, short for compressor/decompressor (or compress/decompress). You can think of codecs as little helper applications that the program or the operating system uses to make or play the video file. Without the proper codec, a video file can’t be played by a computer.

Video codecs are often proprietary and may involve additional licensing fees. Video editing software (and your operating system) will always come with at least a few built-in codecs, and will usually allow you to install others.

How are codecs different from containers?

This one gets a bit tricky for photographers to understand, since it’s pretty different from the way still image files work. The file format container (such as .MOV or .AVI) is a way to pack up the data for storage. The codec (such as H.264) is the method for actually encoding and decoding the video and audio signal inside the container.

It is possible, for instance, to take an MTS file created with the H.264 codec and turn it into an MOV file simply by “rewrapping” it. During the rewrap process, only the container’s packaging (its headers, indexes, and packet structure) is changed, while the encoded video and audio data, which makes up the vast majority of the file, stays exactly the same.

Codec compatibility

The large number of codecs in use makes video compatibility a very complicated arena. You can’t tell what codec is used from the file extension, and your system software may only give you partial information. Your video editing software may be able to tell you what codec was used to make the file, or you may need to get some specialized software.

H.264 codec

One of the most versatile codec families in use today is H.264 (also called MPEG-4 Part 10, or AVC). It offers excellent compression with high quality. When H.264 is used with a high bit rate, it provides really excellent quality, as you will see if you play a Blu-ray disc. And it’s also useful when compression is the most important feature: it is the codec used for web streaming by services such as Vimeo.

In addition to a great quality/compression ratio, H.264 offers a tremendous amount of flexibility as to the video and audio signal characteristics. It can support 3-D video if you wish, as well as a number of different audio encoding schemes.

In one variation or another, H.264 is supported by many different devices and services. Among these are:

- Blu-ray disc

- Apple iPhone, iPad and iPod

- Canon cameras like the 5D Mark II, 7D, and 1D Mark IV

- Nikon cameras like the D7000, D4 and D800

- Vimeo and YouTube web services

- Sony PlayStation Portable

- Microsoft Zune

- The Adobe Flash and Microsoft Silverlight players

- Intel’s Sandy Bridge i3/i5/i7 processors, which have H.264 decoding built in and should enable even faster workflows with the format

Container formats that support H.264

One reason that H.264 is so popular is that it works with so many container types. Among the types that support H.264 encoding are:

- MOV

- AVCHD (which stores H.264 video in .MTS files)

- MP4 (MPEG-4)

- DivX

Bit rate

The bit rate, or data rate, of a video file is the size of the data stream when the video is playing, measured in kilobits or megabits per second (Kbps or Mbps). Bit rate is a critical characteristic of a file because it sets the minimum transfer rate that the hard drive or Internet connection must sustain to play the video without interruption. If any part of the playback system cannot keep up with the bit rate, the video will stutter or stall.
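Because bit rate is a per-second measure, it converts directly into storage per minute and into the sustained throughput a drive or connection must provide. A minimal sketch with hypothetical function names, using bit rates from the guideline table on this page; it ignores container overhead and the buffering real players do, and the `headroom` margin is an illustrative assumption, not a standard value.

```python
def megabytes_per_minute(bitrate_mbps: float) -> float:
    """Storage needed for one minute of a stream (1 MB = 1,000,000 bytes)."""
    bits_per_minute = bitrate_mbps * 1_000_000 * 60
    return bits_per_minute / 8 / 1_000_000  # 8 bits per byte


def can_sustain(bitrate_mbps: float, link_mbps: float, headroom: float = 1.0) -> bool:
    """True if a drive or network link can keep up with the stream.

    `headroom` is a hypothetical safety multiplier (e.g. 1.25 asks for 25%
    spare capacity); the default of 1.0 checks only the bare bit rate.
    """
    return link_mbps >= bitrate_mbps * headroom


print(megabytes_per_minute(5))        # 37.5  (1920x1080 web stream)
print(megabytes_per_minute(40))       # 300.0 (Blu-ray)
print(can_sustain(5, link_mbps=8))    # True
print(can_sustain(40, link_mbps=25))  # False
```

The factor of 8 is the usual stumbling block: bit rates are quoted in bits, while file sizes are reported in bytes.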

Because bit rate determines how much data is available to describe each second of the video signal, it has a vital impact on video quality. With higher bit rates, a particular codec can support a larger frame size, a higher frame rate, less compression per frame, more audio data, or some combination of these. If you need to reduce the bit rate, then one or more of these characteristics will have to be downgraded.

Cameras typically record at a higher bit rate than is practical for files delivered over the Internet or on optical disc. On the other hand, some video workflows transcode compressed camera files into a less-compressed editing format, which actually increases the bit rate.

Most software that can edit or transcode video lets you set the bit rate for the new file. Sometimes this setting specifies a constant bit rate (this is how video is typically recorded by the camera). Sometimes the bit rate can be variable, with a “target” for the software to try to hit and a maximum allowable rate for the stream. Variable bit rates can produce files that are better optimized for delivery via the Internet or optical disc.
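The difference between the two modes shows up when you measure a stream second by second. In a variable-bit-rate file, busy scenes get more bits and simple scenes fewer, so the average should land near the target while no second exceeds the maximum. The sketch below summarizes a hypothetical per-second profile; real encoders enforce the maximum with a buffer model rather than strict one-second windows, so treat this as an illustration only.

```python
from typing import Sequence, Tuple


def vbr_summary(per_second_kbits: Sequence[float],
                max_kbps: float) -> Tuple[float, float, bool]:
    """Return (average kbps, peak kbps, whether every second stays under the maximum)."""
    if not per_second_kbits:
        raise ValueError("need at least one second of data")
    average = sum(per_second_kbits) / len(per_second_kbits)
    peak = max(per_second_kbits)
    return average, peak, peak <= max_kbps


# Hypothetical 4-second profile: a simple shot, two busier ones, then calm again.
profile = [3000, 5000, 7000, 5000]
print(vbr_summary(profile, max_kbps=8000))     # (5000.0, 7000, True)

# A constant-bit-rate stream at the same average has no peaks at all.
print(vbr_summary([5000] * 4, max_kbps=8000))  # (5000.0, 5000, True)
```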

It’s difficult to make blanket statements about the relationship of bit rate to video quality because the rate is dependent on so many different factors, as outlined above. However, we can provide some general guidelines for bit rate levels, using H.264 encoding for some popular uses. (Note that these are provided for general informational purposes only; we are not suggesting that you manually adjust these settings.)

| Use | Typical H.264 bit rate |
|---|---|
| 640×480 Internet video streaming | 700 Kbps |
| 1920×1080 Internet video streaming | 5 Mbps |
| Blu-ray disc | 40 Mbps |

In most cases, you should let your software’s preset assign the correct bit rate when you are transcoding or outputting new files.

Transcoding

Transcoding is the process of converting some part of a video file’s format to another type. If you change the frame size, bit rate, codec, or audio signal, you are said to be transcoding the file. Transcoding is generally time-consuming and can add a significant hurdle to the production process.

Transcoding also introduces the possibility of errors into the file, particularly if the video signal is being transformed in some significant way, such as a change in frame rate. For critical applications, it’s best to review all transcoded footage before discarding the original footage or releasing the new footage to a client. Of course, this makes the transcoding process take even longer.

If you can accomplish your workflow without transcoding, you’ll generally save yourself time, money, and storage space.

Rewrapping

In some cases, you can change a file’s format without transcoding. When you rewrap a file, you typically change the container format, but you don’t change the video or audio signal itself (in some cases, you might be making a change to the color space or audio portion of the file). Rewrapping a file is much quicker than transcoding, since most of the bits are simply copied to the new file, rather than processed in some way.
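One widely used open-source tool, ffmpeg (not otherwise discussed on this page), makes the difference concrete: its `-c copy` option copies the encoded streams into a new container without re-encoding them, while naming an encoder such as `libx264` forces a full transcode. The Python sketch below only builds the two argument lists; the file names are hypothetical, and actually running the transcode requires an ffmpeg build that includes libx264.

```python
import subprocess
from typing import List


def rewrap_command(src: str, dst: str) -> List[str]:
    """Copy the video and audio streams ffmpeg selects by default into a new container."""
    return ["ffmpeg", "-i", src, "-c", "copy", dst]


def transcode_command(src: str, dst: str, video_bitrate: str = "5M") -> List[str]:
    """Re-encode the video to H.264 at a target bit rate and the audio to AAC."""
    return ["ffmpeg", "-i", src,
            "-c:v", "libx264", "-b:v", video_bitrate,
            "-c:a", "aac", dst]


def run(cmd: List[str]) -> None:
    """Execute a command, raising CalledProcessError if it reports failure."""
    subprocess.run(cmd, check=True)


print(rewrap_command("clip.mts", "clip.mov"))
# ['ffmpeg', '-i', 'clip.mts', '-c', 'copy', 'clip.mov']
```

A stream-copy rewrap is fast because the bits are copied rather than decoded and re-encoded, but it can fail if the target container cannot hold one of the source codecs.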

Raw video formats

While DSLR cameras will typically shoot a raw still photo, they don’t shoot raw video. Only a handful of cameras shoot raw video, and they are very expensive and require specialized workflow tools and very high-performance hardware. The two leading manufacturers in this marketplace are the Red Digital Cinema Camera Company and the Arri Group. These manufacturers produce cameras capable of shooting much larger frame sizes, and are a popular choice for high-end commercial production, network television, and feature films.

The footage shot by these cameras is called “raw” because it contains unprocessed (or minimally processed) data from the image sensor. Raw footage also typically has a higher dynamic range than 8-bit footage. The higher dynamic range of raw footage allows for greater adjustment of the look of the footage in post-production, since there is more data to start with.

In most cases, the raw video files are converted to a compressed format during the postproduction stage of a project, much like raw still images must be converted to rendered files before they can be used in most workflows. However, software developers such as Adobe have added support for raw formats in a native workflow. In these cases, the computer used for editing is often accelerated to the hilt with additional memory, ultra-fast data storage, and dedicated hardware to improve graphics processing.

Still plus video?

Raw video capture offers the promise of high-quality still images created directly from the video footage, typically called a “frame grab”. While these cameras offer excellent resolution and dynamic range, there is one problem that is difficult to surmount. Video footage is typically shot at shutter speeds between 1/50th and 1/60th of a second for normal motion imaging. This shutter speed is too slow to freeze most motion.

The slow shutter speed often makes frame grabs unsuitable for capturing a moving scene – precisely the type of subject matter for which a frame grab is most appealing.
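The arithmetic behind this is simple: blur is roughly how far the subject travels across the frame during one exposure. The shutter speeds above match the common “180-degree shutter” convention of exposing each frame for half the frame interval (1/50 s at 25 fps, 1/60 s at 30 fps). A sketch with hypothetical function names and a hypothetical subject crossing a 1920-pixel-wide frame in two seconds:

```python
def shutter_180_degree(fps: float) -> float:
    """Exposure time (seconds) for a shutter that is open half of each frame interval."""
    return 1.0 / (2.0 * fps)


def blur_pixels(speed_px_per_s: float, exposure_s: float) -> float:
    """Approximate smear length, in pixels, of a subject moving during one exposure."""
    return speed_px_per_s * exposure_s


speed = 1920 / 2.0                       # crosses the full frame width in 2 seconds
video_exposure = shutter_180_degree(25)  # 1/50 s
print(round(blur_pixels(speed, video_exposure), 1))  # 19.2 pixels of smear
print(round(blur_pixels(speed, 1 / 1000), 2))        # 0.96 at a fast 1/1000 s
```

A smear of about 19 pixels reads as natural motion at 25 frames per second, but in a single frame grab it looks soft; the same subject at 1/1000 s moves less than one pixel.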

Compressed video formats

With 24–60 frames recorded each second, a video signal by its very nature is going to be large. Compression is needed at several different phases.

In the capture phase, the camera applies a constant bit rate compression algorithm to reduce the file size of the video being written to the memory card or hard drive. For example, a high-definition video file recorded at 1920×1080 pixels and 30 frames per second is approximately 9 GB per minute if it is uncompressed. A DSLR camera compresses the video as it records, discarding information to achieve a file size of approximately 320 MB (about 0.3 GB) per minute.
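The uncompressed figure follows from frame size, frame rate, and the number of bits stored per pixel, which depends on bit depth and chroma subsampling and is not stated above. Assuming 20 bits per pixel (10-bit samples with 4:2:2 chroma subsampling) gives a result close to 9 GB per minute; 8-bit 4:2:0 video (12 bits per pixel) would come to roughly 5.6 GB. A sketch with a hypothetical function name:

```python
def uncompressed_gb_per_minute(width: int, height: int, fps: float,
                               bits_per_pixel: float) -> float:
    """Size of one minute of uncompressed video, in gigabytes (10**9 bytes)."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bytes_per_frame * fps * 60 / 1e9


raw = uncompressed_gb_per_minute(1920, 1080, 30, bits_per_pixel=20)  # 10-bit 4:2:2 (assumed)
camera = 0.32  # GB per minute, from the DSLR example above
print(round(raw, 2))        # 9.33
print(round(raw / camera))  # 29, i.e. roughly a 29:1 compression ratio
print(round(uncompressed_gb_per_minute(1920, 1080, 30, bits_per_pixel=12), 2))  # 5.6
```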

In most cases, professionals also apply some form of compression through the use of a video codec. Applying less compression results in a clearer source image, but a far more expensive process, due to the added costs of the camera, storage media capacity, computer processing power for editing, and editing storage.

Compression lowers bit rate

Video is compressed to lower the data rate and therefore the size of a file. The goal is to make the file size small while maintaining acceptable visual and auditory quality. Without compression, most video cameras and hardware would simply not be able to keep up with the needed data rates.

In practice, you’re probably already familiar with compression. You encounter lossy compression when you take photos in JPEG format. JPEG compression, using a variety of techniques, can make a single image much smaller, but it can result in a considerable loss of quality. Much like still image compression, video compression takes one of two forms: lossless and lossy.

- Lossless: Lossless compression makes a file smaller, but the decoded file is identical to the original after decompression. One of the simplest lossless schemes is “run-length encoding”, which stores a run of identical values as a single value plus a count. Lossless video codecs, such as the Animation codec (which is typically used for archiving or transferring files), have a low compression ratio. Usually, it’s no more than 2:1.

- Lossy: As the word implies, lossy compression means you lose some image, video, or audio information. Lossy compression is a bit tricky because good lossy compression is a balancing act between quality, data rate/file size, color depth, and fluid motion. Almost every video compression scheme is lossy. Lossy compression schemes like H.264 (used on Canon video DSLRs) can have compression ratios of more than 100:1.
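Run-length encoding also shows why lossless ratios stay low for camera footage. The toy byte-level version below (a hypothetical illustration, not the actual Animation codec format) stores each run of identical values as a (value, count) pair: a flat area collapses to a couple of pairs, but noisy pixels, where neighbors rarely match, produce one pair per byte. Decoding restores the original exactly, which is what makes the scheme lossless.

```python
from typing import List, Tuple


def rle_encode(data: bytes) -> List[Tuple[int, int]]:
    """Collapse runs of identical bytes into (value, count) pairs."""
    runs: List[Tuple[int, int]] = []
    for value in data:  # iterating over bytes yields ints 0-255
        if runs and runs[-1][0] == value:
            runs[-1] = (value, runs[-1][1] + 1)
        else:
            runs.append((value, 1))
    return runs


def rle_decode(runs: List[Tuple[int, int]]) -> bytes:
    """Expand (value, count) pairs back into the original bytes."""
    return b"".join(bytes([value]) * count for value, count in runs)


flat_row = bytes([0] * 8 + [255] * 4)        # a dark area, then a bright one
noisy_row = bytes([12, 15, 11, 14, 13, 16])  # sensor noise: neighbors differ
print(rle_encode(flat_row))                  # [(0, 8), (255, 4)]
print(len(rle_encode(noisy_row)))            # 6 pairs for 6 bytes: no savings
assert rle_decode(rle_encode(flat_row)) == flat_row  # lossless round trip
```

If each pair were stored as two bytes, the noisy row would actually double in size, which is why practical lossless codecs combine several techniques.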

With video workflows, compression can be applied (or removed) at several stages.

- Capture: A codec is used to compress the video and audio during acquisition. For example, the Canon 7D DSLR records video using the H.264 codec.

- Editing: Compression can also be added during ingestion or editing, but this is not part of most DSLR workflows, because the files are already highly compressed.

- Reduced compression editing: There are also workflows that rely on reduced compression during the editing process. In its 32-bit versions, Final Cut Pro typically requires transcoding files with the ProRes codec, which actually reduces compression in the file. (Note that 64-bit NLE software often makes transcoding unnecessary, saving time and storage space.)

- Delivery: It is extremely common to compress video files for delivery. This allows the edit process to proceed with a high-quality version of the footage, and to apply compression only as the files are prepared for output for a specific purpose.

Format obsolescence

A major challenge with regard to the preservation of and access to video files is the long-term readability of file formats. This is especially true if they are proprietary, which is increasingly common. For this reason, many choose to create archive versions of the final program (and sometimes source files) using several different formats.